One step forward, two steps back in Online Harms bill

What do pornography and hate speech have in common? Well, the federal government says they are both harmful. That’s why they’ve wrapped these issues up together in their recently announced Online Harms Act, otherwise known as Bill C-63.

As the government’s news release stated, “Online harms have real world impact with tragic, even fatal, consequences.” As such, the government takes the view that the responsibility for regulating all sorts of online harm falls to it. But the government’s approach in Bill C-63, though it contains some good elements, is inadequate.

BACKGROUND

In June 2021, the federal government introduced hate speech legislation focused on hate propaganda, hate crime, and hate speech. The bill was widely criticized, including in ARPA Canada’s analysis, and failed to advance prior to the fall 2021 election. Nonetheless, the Liberal party campaigned in part on a promise to bring forward similar legislation within 100 days of re-election.

Over two years have passed since the last federal election. In the meantime, the government held a consultation and convened an expert panel on the topic of online harms. Based on these and on feedback from stakeholders, the government has now tabled legislation to combat online harm more broadly. Bill C-63 defines seven types of “harmful content”:

a) intimate content communicated without consent;

b) content that sexually victimizes a child or revictimizes a survivor;

c) content that induces a child to harm themselves;

d) content used to bully a child;

e) content that foments hatred;

f) content that incites violence; and

g) content that incites violent extremism or terrorism.

The hate speech elements of Bill C-63 are problematic for Canadians’ freedom of expression. We will address those further on.

Still, though the bill could be improved, it is a step in the right direction on the issue of child sexual exploitation.

DIGITAL SAFETY OVERSIGHT

If passed, part 1 of the Online Harms Act will create a new Digital Safety Commission to help develop online safety standards, promote online safety, and administer and enforce the Online Harms Act. A Digital Safety Ombudsperson will also be appointed to advocate for and support online users. The Commission will hold online providers accountable and, along with the Ombudsperson, provide an avenue for victims of online harm to bring forward complaints. Finally, a Digital Safety Office will be established to support the Commission and Ombudsperson.

The Commission and Ombudsperson will have a mandate to address any of the seven categories of harm listed above. But their primary focus, according to the bill, will be “content that sexually victimizes a child or revictimizes a survivor” and “intimate content communicated without consent.” Users can submit complaints or make other submissions about harmful content online, and the Commission is given power to investigate and issue compliance orders where necessary.

Social media services are the primary target of the Online Harms Act. The Act defines “social media service” as:

“a website or application that is accessible in Canada, the primary purpose of which is to facilitate interprovincial or international online communication among users of the website or application by enabling them to access and share content.”

The Act further clarifies that this definition includes:

an adult content service, namely a social media service that is focused on enabling its users to access and share pornographic content; and

a live streaming service, namely a social media service that is focused on enabling its users to access and share content by live stream.

Oversight will depend on the size of a social media service, including its number of users. So, at the very least, the Digital Safety Commission will regulate online harm not only on major social media sites such as Facebook, X, and Instagram, but also on pornography sites and live streaming services.

Bill C-63 provides some specifics, but it would also grant the government broad powers to make regulations supplementing the Act. The bill itself is unclear about the extent to which the Commission will address online harms other than pornography, such as hate speech. What we do know is that the Digital Safety Commission and Ombudsperson will oversee the removal of “online harms” but will not punish individuals who post or share harmful content.

DUTIES OF OPERATORS

Bill C-63 lays out three overarching duties that apply to any operator of a regulated social media service, such as Facebook or Pornhub.

1. Duty to act responsibly

The duty to act responsibly includes:

mitigating risks of exposure to harmful content,

implementing tools that allow users to flag harmful content,

designating an employee as a resource for users of the service,

and ensuring that a digital safety plan is prepared.

This duty relates to harmful content broadly. Although each category of “harmful content” is defined further in the Act, the operator is responsible for determining whether content is harmful.

While it’s important for the Commission to ensure that illegal pornography is removed, challenges may arise when the Commission seeks the removal of speech that a user has flagged as harmful.

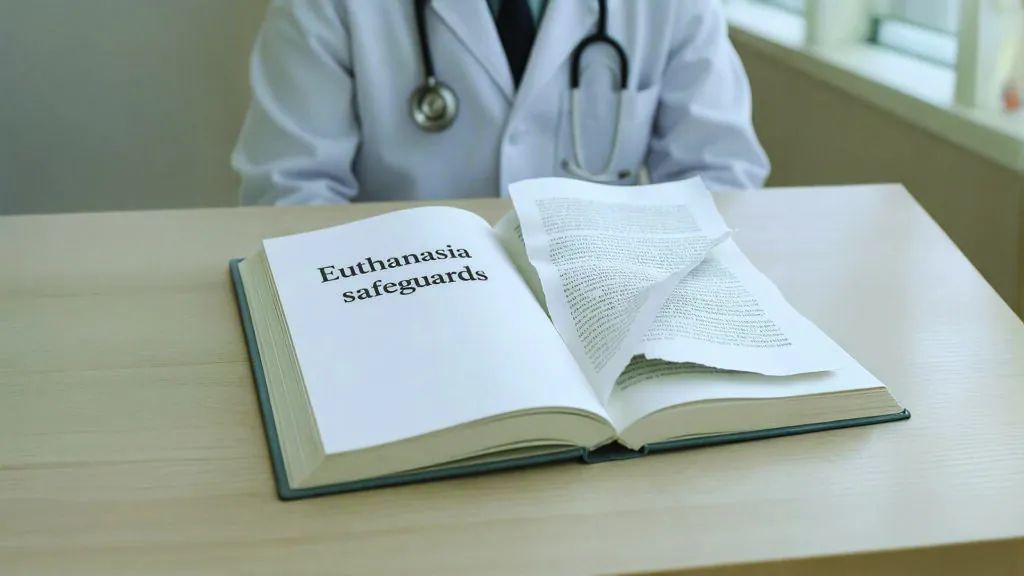

2. Duty to protect children

The meaning of the duty to protect children is not clearly defined. The bill notes that:

“an operator must integrate into a regulated service that it operates any design features respecting the protection of children, such as age-appropriate design, that are provided for by regulations.”

This could refer to age-appropriate design that keeps children from being drawn into harmful content, to warning labels on pornography sites, or potentially to some level of age verification before children can access harmful content. These regulations, however, would be established by the Commission only after the Online Harms Act is passed.

The Liberal government says that its Online Harms Act makes Bill S-210 unnecessary. Bill S-210 would require age verification for access to online pornography. In its current form, however, the Online Harms Act does nothing to directly restrict minors’ access to pornography. It would allow minors to flag content as harmful and would require “age-appropriate design,” but it would not require pornography sites to refuse access to youth. As such, ARPA will continue to advocate for the passage of Bill S-210 to restrict access to pornography and hold pornography sites accountable.

3. Duty to make certain content inaccessible

Finally, Bill C-63 will make social media companies responsible for making certain content inaccessible on their platforms. This section is primarily focused on content that sexually victimizes a child or revictimizes a survivor and on intimate content communicated without consent. ARPA has lauded provincial efforts in British Columbia and Manitoba to crack down on such content in the past year. If such content is flagged on a site and deemed to be harmful, the operator must make it inaccessible within 24 hours and keep it inaccessible.

In 2020, Pornhub was credibly accused of hosting videos featuring minors. Additionally, many women said they had asked Pornhub to remove non-consensual videos of themselves and that Pornhub had failed to do so.

At the time, ARPA Canada submitted a brief to the Committee studying sexual exploitation on Pornhub. Our first recommendation was that pornography platforms must be required to verify age and consent before content is uploaded. Second, we recommended that victims must have a means of immediate legal recourse to have content removed from the internet. This duty to make content inaccessible will provide some recourse for victims to flag content and have it removed quickly. Further, the Commission will provide accountability to ensure that certain content is removed and remains inaccessible.

The Act creates a new bureaucratic agency for this purpose rather than holding companies accountable through the Criminal Code. The Criminal Code is arguably a stronger deterrent. For example, Bill C-270, scheduled for second reading in the House of Commons in April 2024, would make it a criminal offence to create or distribute pornographic material without first confirming that any person depicted was over 18 years of age and gave express consent to the content. Bill C-270 would amend the Criminal Code to further protect vulnerable people. Instead of criminal penalties, the Online Harms Act would institute financial penalties for failure to comply with the legislation.

Of course, given the sheer volume of online traffic and social media content and the procedural demands of enforcing criminal laws, a strong argument can be made that criminal prohibitions alone are insufficient to deal with the problem. But if new government agencies with oversight powers are to be established, it’s crucial that the limits of their powers are clearly and carefully defined and that they are held accountable to them.

THE GOOD NEWS…

This first part of the Online Harms Act contains some important attempts to combat online pornography and child sexual exploitation. As Reformed Christians, we understand that a lot of people are using online platforms to promote things that are a direct violation of God’s intention for flourishing in human relationships.

This bill certainly doesn’t correct all those wrongs, but it at least recognizes that improvement is needed in how these platforms are used, so that vulnerable Canadians are protected. Most Canadians support requiring social media companies to remove child pornography or non-consensual pornography. In a largely unregulated internet, many Canadians also support holding social media companies accountable for such content, especially companies that profit from pornography and sexual exploitation. Bill C-63 is the government’s attempt to bring some regulation to this area.

… AND NOW THE BAD NEWS

But while some of the problems addressed through the bill are objectively harmful, how do we avoid subjective definitions of harm? Bill C-63 raises serious questions about freedom of expression.

Free speech is foundational to democracy. In Canada, it is one of our fundamental freedoms under section 2 of the Charter. Attempts to curtail speech in any way are often seen as an assault on liberty. Bill C-63 would amend the Criminal Code and the Canadian Human Rights Act to combat hate speech online. But the bill gives too much discretion to government actors to decide what constitutes hate speech.

HARSHER PENALTIES FOR “HATE SPEECH” CRIMES

The Criminal Code has several offences that fall under the colloquial term “hate speech.” The Code prohibits advocating genocide, publicly inciting hatred that is likely to lead to a breach of the peace, or willfully promoting hatred or antisemitism. The latter offence is potentially broader, but it also provides several defenses, including:

the statement was true

the statement was a good faith attempt to argue a religious view

the statement was about an important public issue meriting discussion and the person reasonably believed the statement was true

Bill C-63 would increase the maximum penalties for advocating genocide and for inciting or promoting hatred or antisemitism. The maximum penalty for advocating genocide would rise from five years to life in prison, and the maximum penalty for publicly inciting hatred or promoting hatred or antisemitism would rise from two years to five.

Bill C-63 defines “hatred” as “the emotion that involves detestation or vilification and that is stronger than disdain or dislike.” It also clarifies that a statement does not incite or promote hatred “solely because it discredits, humiliates, hurts or offends.” This clarification is better than nothing, but it inevitably relies on judges to determine the line between statements that are merely offensive or humiliating and those that generate emotions of vilification and detestation.

ARPA Canada recently intervened in a criminal hate speech case involving Bill Whatcott. Whatcott was charged with criminal hate speech for handing out flyers at a pride parade that warned about the health risks of engaging in homosexual relations. Prosecutors argued that Whatcott was promoting hatred against an identifiable group by condemning homosexual conduct. This is an example of a person being accused of hate speech for expressing his beliefs, not only because of the manner in which he expressed them but also because of their content.

NEW STAND-ALONE HATE CRIME OFFENCE

The Criminal Code already makes hatred a factor in sentencing. So, for example, if you assault someone and there is conclusive evidence that your assault was motivated by racial hatred, that “aggravating factor” will likely mean a harsher sentence for you. But the offence is still assault, and the maximum penalties for assault still apply.

Bill C-63, however, would add a new stand-alone hate crime offence to the Criminal Code: committing any offence motivated by hatred, punishable by up to life in prison.

This would mean that any crime found to be motivated by hatred could count as two crimes. Consider an act of vandalism, for example. The crime of mischief (which includes damaging property) can carry a maximum penalty of 10 years. But if you damaged property because of hatred toward a group defined by race, religion, or sexuality, you could face an additional criminal charge and potentially life in prison.

ANTICIPATORY HATE CRIMES?

Bill C-63 would permit a person to bring evidence before a court based on fear that someone will commit hate speech or a hate crime in the future. The court may then order the accused to “keep the peace and be of good behavior” for up to 12 months and subject that person to conditions including wearing an electronic monitoring device, curfews, house arrest, or abstaining from consuming drugs or alcohol.

There are other circumstances in which people can go to court for fear that a crime will be committed, for example if you have reason to believe that someone will damage your property, cause you injury, or commit terrorism. However, the problems created by unclear or subjective definitions of hatred will only be magnified when a court must decide whether someone will commit hate speech or a hate crime in the future.

BRINGING BACK SECTION 13

This is not the first time the government has tried to regulate hate speech through human rights law. The former section 13 of the Canadian Human Rights Act prohibited telephone and, later, internet communications that were “likely to expose a person or persons to hatred or contempt” on the basis of their race, religion, sexuality, etc.

As noted by Joseph Brean in the National Post, section 13 was passed in 1977, mainly in response to telephone hotlines that played racist messages. From there, the restrictions on hate speech were extended to the internet (telecommunications, including the internet, fall under federal jurisdiction) until Parliament repealed section 13 in 2013. Brean writes that section 13 “was basically only ever used by one complainant, a lawyer named Richard Warman, who targeted white supremacists and neo-Nazis and never lost.” In fact, Warman brought forward 16 hate speech cases and won them all.

A catalyst for the controversy over human rights hate speech provisions was a case involving journalist Ezra Levant. Levant faced a human rights complaint for publishing Danish cartoons of Muhammad in 2006. In response to the complaint, Levant published a video of his interview with an investigator from the Alberta Human Rights Commission. Then in 2007, a complaint was brought against Maclean’s magazine for publishing an article by Mark Steyn that was critical of Islam.

Such stories brought section 13 to public attention and revealed how human rights law was being used to quash officially disapproved political views.

Bill C-63 would bring back a slightly revised section 13. The new section 13 states:

“It is a discriminatory practice to communicate or cause to be communicated hate speech by means of the Internet or any other means of telecommunication in a context in which the hate speech is likely to foment detestation or vilification of an individual or group of individuals on the basis of a prohibited ground of discrimination.”

A few exceptions apply. For example, this section would not apply to private communication or to social media services that are simply hosting content posted and shared by users. So, for example, if someone wanted to bring a complaint about an ARPA post on Facebook, that complaint could be brought against ARPA, but not against Facebook.

If a person is found to have engaged in hate speech, the Canadian Human Rights Tribunal may order that person to pay up to $20,000 to the victim and up to $50,000 to the government. This possibility of financial benefit gives people an incentive to bring forward hate speech complaints.

British Columbia has a similar hate speech provision in its Human Rights Code. ARPA wrote about how that provision was interpreted and enforced to punish someone for saying that a “trans woman” is really a man. The Tribunal condemned a flyer in that case for “communicat[ing] rejection of diversity in the individual self-fulfillment of living in accordance with one’s own gender identity.”

The Tribunal went on to reject the argument that the flyer was not intended to promote hatred or discrimination, “but only to ‘bring attention to what [he] views as immoral behaviour, based on his religious belief as a Christian’.” Ultimately, the Tribunal reasoned that there was no difference between promoting hatred and bringing attention to what the defendant viewed as immoral behavior.

NO DEFENSES FOR CHRISTIANS?

As noted above, when it comes to the Criminal Code’s hate speech offences, there are several defenses available (truth, expressing a religious belief, and advancing public debate). These are important defenses that allow Canadians to say what they believe to be true and to express sincere religious beliefs.

But the Canadian Human Rights Act offers no defenses. And complaints of hate speech in human rights law are far easier to bring and to prosecute than criminal charges. Criminal law requires proof beyond a reasonable doubt. But under the Human Rights Act, statements that are likely (i.e., more likely than not, in the Tribunal’s view) to cause detestation or vilification will be punishable. So hate speech would be regulated in two different places, the Criminal Code and the Human Rights Act, with the latter offering fewer procedural rights and a lower standard of proof.

Bill C-63 clarifies that a statement does not express detestation or vilification “solely because it expresses disdain or dislike or it discredits, humiliates, hurts or offends.” But again, the line between dislike and detestation is unclear. Human rights complaints are commonly submitted because of humiliation or offence, rather than any clear connection to detestation or vilification.

Section 13 leaves too much room for subjective and ideologically motivated interpretations of what constitutes hate speech. The ideological bias that often manifests is a critical theory lens, which sees “privileged” groups like Christians as capable only of being oppressors or haters, while others are seen as “equity-seeking” groups.

For example, in a 2003 case called Johnson v. Music World Ltd., a complaint was made against the writer of a song called “Kill the Christian.” A sample:

Armies of darkness unite

Destroy their temples and churches with fire

Where in this world will you hide

Sentenced to death, the anointment of christ

Put you out of your misery

The death of prediction

Kill the christian

Kill the christian…dead!

The Tribunal noted that the content and tone appeared to be hateful. However, because the Tribunal thought Christians were not a vulnerable group, it decided this was not hate speech.

By contrast, in a 2008 case called Lund v. Boissoin, a panel found that a letter to the editor of a newspaper criticizing homosexuality was hate speech. The chair of the panel was the same person in both Johnson and Lund.

Hate speech provisions are potentially problematic for Christians who seek to speak truth about various issues in our society. Think about conversion therapy laws that ban talking about biblical views of gender and sexuality in some settings, or bubble zone laws that prevent pro-life expression in designated areas. But beyond that, freedom of speech is also important for those with whom we may disagree. Everyone benefits from being able to discuss public issues openly.

GOVERNMENT’S ROLE IN REGULATING SPEECH

This all raises serious questions about whether the government should be regulating “hate speech” at all. After all, hate speech provisions in the Human Rights Act or the Criminal Code have led and could lead to inappropriate censorship. But government also has a legitimate role to play in protecting citizens from harm.

1. Reputational harm and safety from threats of violence

Arguably the government’s role in protecting citizens from harm includes reputational harm. Imagine someone was spreading accusations in your town that everyone in your church practices child abuse, for example. That is an attack on your reputation as a group and as individual members of the group – which is damaging and could lead to other harms, possibly even violence. Speech can do real damage.

Jeremy Waldron, a prominent legal philosopher and a Christian, suggests that the best way to think about and enforce “hate speech” laws is as a prohibition on defaming or libeling a group, similar to how our law has long punished defaming or libeling an individual. Such a conception may help to rein in the scope of what we call “hate speech,” placing the focus on demonstrably false and damaging accusations, rather than on controversial points of view on matters relating to religion or sexuality, for example.

Hatred is a sin against the 6th commandment, but the government cannot regulate or criminalize emotions per se or expressions of them, except insofar as they are expressed in and through criminal acts or by encouraging others to commit criminal acts. That’s why we rightly have provisions against advocating or inciting terrorism or genocide, or counseling or encouraging someone to commit assault, murder, or any other crime.

When the law fails to set an objective standard, however, it is open to abuse – for example, by finding a biblical view of gender and sexuality to constitute hate speech. Regrettably, Bill C-63 opens up more room for subjectivity and ideologically based restrictions on speech. It does nothing to address the troubling interpretations of “hate speech” that we’ve seen in many cases in the past. And, by putting hate speech back into the Human Rights Act, the bill makes many more such abuses possible. We suspect it will result in restricting speech that is culturally unacceptable rather than objectively harmful.

2. Harm of pornography

As discussed earlier, Bill C-63 does introduce some good restrictions when it comes to online pornography. In our view, laws restricting pornography are categorically different from laws restricting “hate speech,” because the former are neither designed to censor beliefs, opinions, or arguments nor in danger of being applied that way. Restricting illegal pornography prevents objectively demonstrable harm. Pornography takes acts that ought to express love and marital union and displays them for consumption and the gratification of others. Much of it depicts degrading or violent behavior. Pornography’s harms, especially to children, are well documented.

The argument is often made that pornography laws risk censoring artistic expression involving sexuality or nudity. But Canada is very far, both culturally and legally, from censoring art for that reason – and Bill C-63 wouldn’t do so. Its objectives as they relate to pornography are mainly to reduce the amount of child pornography and non-consensual pornography easily available online.

CONCLUSION

While the Online Harms Act contains some good elements aimed at combatting online pornography, its proposed hate speech provisions are worrisome. Unfortunately, the federal government chose to deal with both issues in one piece of legislation, when they should have been addressed in two separate bills.

As Bill C-63 begins to progress through the House of Commons, we can continue to support Bills S-210 and C-270, private members’ bills which combat the online harms of pornography. Meanwhile, head to ARPACanada.org for action items related to the Online Harms Act. ...