Lots of potential, but the output is only as valuable as the input

*****

About a year ago, the research firm OpenAI made a version of its text-generation tools available for free use from its website. The chatbot, called ChatGPT, accepts a prompt from the user, such as a question or a request for a piece of writing, and responds with a seemingly original composition.

If you have experimented with this tool, you may be impressed with its ability to produce natural-sounding English paragraphs – or perhaps you find it eerie and wonder what changes tools like this will bring.

How large language models work

To demystify what language tools like ChatGPT are doing, here’s a game you can try at home. To play, you’ll need to select a book and pick a letter of the alphabet. I’ll play along while I write.

A) One letter

I have a copy of Pilgrim’s Progress handy, and I choose the letter m. Open your book at random and find the first word on that page that contains your letter. For me, the word is mind. In the word that you found, what letter occurs immediately after the letter you were looking for, just as i comes next in mind? That letter is your new letter.

Now flip to a new page and repeat. The first i that I see is in the word it, so that means t is my next letter. Do it again. On the next page that I turn to I see t in the word delectable, so a is my next letter. So far my letters have been m-i-t-a.

On my next turn, I find a in about and take the letter b. I then find b in Beelzebub and take the letter e. Finally, I find e in the word be, and since it’s followed by a space rather than another letter, this ends the game.

Looking back, the letters of the game make the word mitabe. That’s not a real word, of course, but it’s not completely random either. The letter-level statistics of the English language led this process to make something that looks more English-like than we are likely to have gotten by grabbing six tiles out of a bag of Bananagrams.
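For readers who would rather let a computer flip the pages, the one-letter game can be sketched in a few lines of Python. This is only a rough approximation under stated assumptions: "opening the book at random" is modeled as jumping to a random position in a string, the function name `play_letter_game` and the `max_turns` cap are invented for illustration, and the sample text is a made-up stand-in rather than a quotation from Pilgrim’s Progress.

```python
import random


def play_letter_game(text, start_letter, rng, max_turns=20):
    """Play the one-letter game and return the letters collected."""
    text = text.lower()
    letters = [start_letter]
    for _ in range(max_turns):
        current = letters[-1]
        # "Flip to a new page": jump to a random position in the text.
        page_start = rng.randrange(len(text))
        # Find the first occurrence of the current letter from there,
        # wrapping around to the beginning if none is found.
        pos = text.find(current, page_start)
        if pos == -1:
            pos = text.find(current)
        if pos == -1:
            break  # the letter never occurs in this text
        following = text[pos + 1 : pos + 2]
        if not following.isalpha():
            break  # followed by a space, punctuation, or the end: game over
        letters.append(following)
    return "".join(letters)


# Stand-in text (hypothetical); substitute the full text of any book.
sample_text = "the mind of man is made to be about many delectable things"
print(play_letter_game(sample_text, "m", random.Random()))
```

Each run gives a different made-up word, but every pair of neighboring letters in the result is a pair that really occurs somewhere in the source text.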

B) Two letters

This game becomes more interesting if we level it up. Instead of looking for the first occurrence of our most recent letter, what if we searched for the first time our last two letters occur together?

If my last two letters were be, then I would need to look for a word like become and take c as my next letter. The effect is that we are using a larger context as we string out our sequence of letters.

This version of the game is tedious to do by hand, but we can automate it using a computer. Drawing from the text of Pilgrim’s Progress and using two letters of context, an algorithm replicating this game generates the text:

SETTERE HEY IST ING TO WAS NOR HOUT SAY SUPOSTIANY

If we sift through the gibberish, we find five real English words – and the rest are at least pronounceable.
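One way such an automated version might look is sketched below. It is not the program that produced the output above. Instead of flipping pages, it builds a lookup table from every run of `k` characters (spaces included) to the characters that follow it in the source, then picks a follower at random, so that common continuations are chosen more often. The names `build_table` and `generate` and the sample text are assumptions made for illustration.

```python
import random
from collections import defaultdict


def build_table(text, k):
    """Map each k-character context to the list of characters that follow it."""
    table = defaultdict(list)
    for i in range(len(text) - k):
        table[text[i : i + k]].append(text[i + k])
    return table


def generate(text, k, length, rng):
    """Generate up to `length` characters using k characters of context."""
    table = build_table(text, k)
    # Start from a random k-character run taken from the source text.
    start = rng.randrange(len(text) - k)
    out = text[start : start + k]
    while len(out) < length:
        followers = table.get(out[-k:])
        if not followers:
            break  # this context appears only at the very end of the text
        # A follower that occurs more often is more likely to be chosen.
        out += rng.choice(followers)
    return out


# Stand-in text (hypothetical); a whole book gives far better results.
sample_text = "so they went on and they came to a wall and so they went on again "
print(generate(sample_text.upper(), k=2, length=50, rng=random.Random()))
```

Raising `k` to 4 or 9 corresponds to the later levels of the game. With a short source text and a large `k`, the program mostly copies its source word for word, which is why a long book is needed before longer contexts produce anything new.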

C) Four letters

If we try again, but this time with a context of four letters, we get:

WOULD BUT THIS ONE COUNTRY AND SMOKE TO HAD KEPT

Although this is nonsense, all the words are real, and shorter strings of words could make sense together.

D) Nine letters

Finally, if we level up the game to use nine characters of context, sentences begin to take shape:

SO CHRISTIAN AND HOPEFUL I PERCEIVE IS AGAINST A WALL

Grammar aside, this is remarkably close to real English.

When it gets bigger still

To get a layman’s understanding of how technology like ChatGPT works, imagine a great leveling-up of this game. Instead of being played on one laptop, it is run on giant server farms of leading tech companies. Instead of using Pilgrim’s Progress as a source text, it is trained on humankind’s entire digitized corpus. Instead of using a few letters of context, it uses a sophisticated model of human language. And instead of using a starting letter, the process is induced by a question or request from a user.

When you enter a prompt into ChatGPT, you are really asking a statistical question: Based on information gathered from innumerable digital texts, what is the most likely response to this prompt?
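To make the phrase "most likely" concrete, here is a toy sketch that counts which word follows which in a small text, turns the counts into probabilities, and picks the most probable next word. It illustrates the statistical question only, under heavy simplifying assumptions: real systems like ChatGPT use a learned model rather than a simple count, and the function names and sample corpus here are hypothetical.

```python
from collections import Counter, defaultdict


def next_word_probabilities(corpus, context_word):
    """Estimate the probability of each word that follows context_word."""
    words = corpus.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    followers = counts[context_word.lower()]
    total = sum(followers.values())
    if total == 0:
        return {}  # the word never appears with a follower
    return {word: count / total for word, count in followers.items()}


def most_likely_next(corpus, context_word):
    """Return the single most probable next word, or None if unknown."""
    probs = next_word_probabilities(corpus, context_word)
    return max(probs, key=probs.get) if probs else None


# Made-up corpus for illustration.
corpus = "the cat sat on the mat and the cat slept"
print(next_word_probabilities(corpus, "the"))
print(most_likely_next(corpus, "the"))
```

Notice that nothing in this procedure checks whether the chosen continuation is true; it reports only what was most common in the text it counted.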

I tried giving ChatGPT the prompt: “Versify the first question and answer of the Heidelberg Catechism into common meter so that it could be sung by a congregation.” It responded with:

In whom is all my trust and joy,

My comfort and salvation?

In Jesus Christ, the sinless One,

The source of my redemption.

My only comfort here on Earth,

My only hope in heaven,

Is that I am not my own, but His,

For all my sins are forgiven.

The meter is forced, but one has to admit that many would-be hymn writers have done worse.

Benefits and risks of text-generators

ChatGPT and similar tools that generate text or images are one example of advances in technology that use algorithms built from data rather than handcrafted by humans. You probably have encountered some of them: data-generated algorithms can identify faces in photographs, control autonomous vehicles, make medical diagnoses, and detect fraudulent transactions.

One effect of this trend is that the technologies become more difficult to understand, even for experts, since the tools are often shaped by deep patterns in the training data that are beyond human perception. They exude something of a magical quality, especially when they are presented with evocative terms like artificial intelligence.

Yet it is important for Christians not to attribute anything magical to unfamiliar technologies. Even without precise expertise in trending technology, we still can develop an informed awareness of the benefits and risks.

1) Output only as good as the input

For one, technology generated from data is only as good as the data it is generated from. A tool like ChatGPT reflects the attributes of the texts that it is trained on.

I gave ChatGPT the prompt, “Explain how the Auburn Affirmation affected the career of J. Gresham Machen” [Editor’s note: Machen was one of the founders of the OPC denomination]. It responded with a page and a half of text that got the basic facts right and read like an answer on an essay test.

But what stood out to me was the uniformly positive terms it used when referring to Machen. He was a “staunch defender of conservative, orthodox Christianity,” to which he had “unwavering commitment,” making a “courageous stand against the Auburn Affirmation” and founding the OPC, “where he could continue to champion conservative Reformed theology.”

No doubt readers will be sympathetic to this portrayal. But it is worth asking why ChatGPT would give Machen heroic verbs and adjectives while describing the proponents of the Auburn Affirmation in dry, factual terms. My speculation is that people interested enough in Machen to write about him have tended to be his admirers, and so ChatGPT is imitating the dominant sentiment in material about Machen.

But does this pass the shoe-on-the-other-foot test? What would ChatGPT produce if we gave it a prompt for which most of its source material was written by enemies of the gospel?

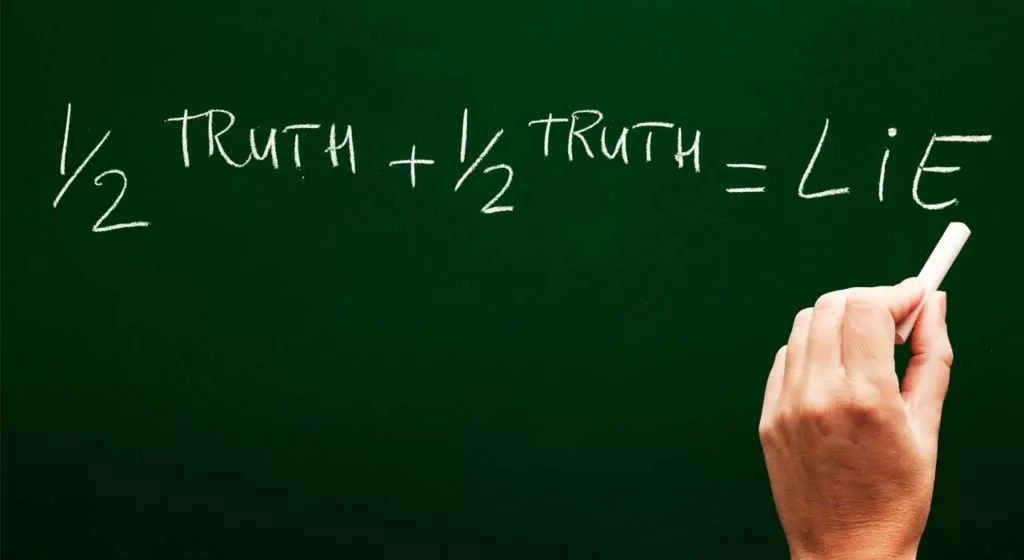

2) Truth isn’t a particular priority

Furthermore, the fact that these tools produce the most probable response to a prompt, based on the data they are trained on, means that truth itself is not a particular priority. I once asked ChatGPT who was the most famous person to play against the eighteenth-century chess-playing automaton known as the Turk (actually an elaborate hoax). The response mentioned Napoleon Bonaparte and Benjamin Franklin – each of whom did face off against the Turk at one point – but it went on to claim that “one of the most famous opponents of the Turk was the Austrian composer Wolfgang Amadeus Mozart,” describing their chess match in dramatic detail. This appears to have been invented out of whole cloth – I can’t track down any evidence that Mozart ever encountered the Turk.

One of my colleagues at Wheaton College, where I teach, described how he caught a student passing off a ChatGPT-generated term paper as his own work. The paper was more-or-less B+ quality, and its bibliography had respectable-looking citations. As it turned out, though, ChatGPT had made the citations up: the claimed authors were real scholars and the journals were real, but the articles themselves didn’t exist. This is what we can expect when the most probable response is not the most truthful response.

3) Temptation to students

This serves to highlight the most obvious risk posed by ChatGPT and similar tools: they provide a new and perfectly convenient way to plagiarize. A student – or a professional writer, for that matter – can whip out a paper in seconds by giving a prompt to a free online tool.

At present, teachers can use services that detect AI-generated text (itself an application of data-driven algorithms), but we can expect that successive generations of text-producing tools will prove to be better at eluding detection. One can imagine an arms race ensuing between text generators and generated-text detectors, with teachers never being able to trust their students again. And what of the rights of the original authors whose work is used to train these tools?

4) Do away with drudgery

On the other hand, it is all too easy to bemoan the potential harms in a new technology and to overlook how it can be used for good. Tools like ChatGPT can be used not only as a cheat for writing, but also as writing aids: You can feed such a tool a paragraph you have written and ask it to polish it up – make it more formal, or less formal, or more succinct, or with a more varied vocabulary. It can act, in a way, as a smart thesaurus.

Another colleague of mine told of a student who defended the use of ChatGPT in writing a paper as a natural progression from tools that are already accepted: If we use spellcheck to eliminate spelling errors and Grammarly to fix syntax, then isn’t it only wise to use the latest tools to improve our rhetoric as well?

One may quibble with this student’s logic, but technological changes throughout history have automated menial tasks, allowing humans to focus on things that are more meaningful. After all, some writing tasks are so much drudgery. You do not want your pastor using ChatGPT to write a sermon, but perhaps you wouldn’t begrudge a businessperson using an automated writing tool that can turn sales data into a quarterly report.

A Christian response

Text-generation tools are part of a suite of data-derived technologies that have gotten much media attention and that seem to have the power to change society as much as the internet and smartphones have done, if not more.

How should we live in light of advancements in science and technology? There are many ways Christians should not respond: we shouldn’t idolize technology or make a false gospel out of it – we should not share the world’s fascination for the next new thing or hope for scientific deliverance from life’s problems. But neither should we approach it with fear or regard it with superstition.

I also would argue that it’s irresponsible to ignore it.

First, to any extent that we use tools like ChatGPT, we should see to it that we work with complete integrity. This is in the spirit of Paul’s exhortation to Timothy that in his ministry of the Word he should be “a worker who has no need to be ashamed” (2 Tim. 2:15) – whatever our calling, our work should be worthy of approval. Students at any level of schooling should note their school’s and teachers’ policies on writing assistants and follow them scrupulously.

The Christian school that my children attend has ruled that no use of ChatGPT is appropriate for any of their schoolwork, and, with admirable consistency, they have banned the use of Grammarly as well. Some of my colleagues have experimented with allowing limited use of ChatGPT as a writing aid in college courses, but with clearly defined boundaries, including that students must cite to what extent ChatGPT was used. For any professional or personal use of these tools as writing aids, we should ask ourselves whether we are presenting our work honestly.

Second, we should exercise a healthy skepticism toward material generated by tools like this. One use of ChatGPT is as a replacement for a search engine when looking up information. You can Google “how to keep wasps out of my hummingbird feeder” and receive a list of websites, each website giving advice about bird feeder maintenance; alternatively, you can ask the same question to ChatGPT and receive a single, succinct summary of tips that it has synthesized from various sources. There is a pernicious draw to attributing authority to information delivered to us by computers.

We should remember, though, that ChatGPT’s answers are only as good as the fallible human-produced text it is trained on. Moreover, for the sake of loving our neighbors, we should bear in mind that tools trained on data will reflect, if not amplify, the biases and prejudices of their input.

Finally, if we use algorithms to manipulate text, we must treat the holy things of God as holy. In the experiment at the beginning of this article, I sampled letters from the text of Pilgrim’s Progress. When I do a similar experiment in one of the courses I teach, some students are curious what would happen if we sample from a book of the Bible. But I believe that is not a respectful use of God’s Word.

Earlier I showed the result of asking ChatGPT to versify part of the Heidelberg Catechism, but I certainly do not advocate using AI-generated texts in congregational singing. One feature of ChatGPT is that it can imitate the style of an author or genre. You could ask ChatGPT to write a story in the style of the Bible, but don’t – that would be blasphemous.

I find the Westminster Larger Catechism’s words about the sixth commandment – that it requires “a sober use of meat, drink, physick, sleep, labour, and recreations” – to be applicable to many other areas. Let’s pray for wisdom to discern the sober use of humanity’s tools in every age.

The author is a member of Bethel Presbyterian in Wheaton, Illinois, and professor of computer science at Wheaton College. This is reprinted with permission of the OPC denomination magazine New Horizons (OPC.org/nh.html) where it first appeared in their January 2024 issue.